I. Kubernetes with containerd: configuration

1. Environment

Three machines, all running CentOS 7.9. Run the matching command on each machine to set its hostname:

hostnamectl set-hostname k8s-master

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

# 10.1.1.10 master

# 10.1.1.11 node1

cat > /etc/hosts << EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.29 k8s-master

192.168.1.4 k8s-node1

192.168.1.142 k8s-node2

EOF
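A quick sanity check for the hosts file can be sketched as follows. The helper name missing_hosts is hypothetical (it is not part of any tool); it prints every expected hostname that has no uncommented entry in the given file.

```shell
#!/bin/sh
# Hypothetical helper: print each expected hostname that has no
# uncommented entry in the given hosts file.
missing_hosts() {
    file=$1
    shift
    for name in "$@"; do
        # Strip comments, then match the name as a whole field after the IP.
        if ! sed 's/#.*//' "$file" | awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }'; then
            echo "$name"
        fi
    done
}
```

On each machine it could be called as missing_hosts /etc/hosts k8s-master k8s-node1 k8s-node2; no output means all three names are present.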

2. Settings

# Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
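To confirm that swap really is off, one option is to count the entries in /proc/swaps, which lists active swap areas below a one-line header. The function name active_swap_count is an illustrative assumption.

```shell
#!/bin/sh
# Hypothetical helper: count active swap areas listed in a /proc/swaps
# style file (the first line is a header and is skipped).
active_swap_count() {
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}
```

After swapoff -a, calling active_swap_count with no argument should print 0; kubelet refuses to start by default while swap is enabled.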

cat > /etc/sysctl.conf << EOF

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

EOF

# Apply the settings

sysctl -p

# Kernel modules and sysctl.d settings

cat <<EOF | tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# Load the modules first; the net.bridge.* keys only exist once br_netfilter is loaded

modprobe overlay

modprobe br_netfilter

sysctl --system

lsmod | grep -e br_netfilter -e overlay

# overlay 91659 0

# br_netfilter 22256 0

# bridge 151336 1 br_netfilter
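The lsmod check above can be turned into a small helper that reports which required modules are still missing. This is only a sketch; the name missing_modules is hypothetical. It compares against the first column of lsmod output, so a module that merely appears in another module's "Used by" column is not counted as loaded.

```shell
#!/bin/sh
# Hypothetical helper: read lsmod output on stdin and print every
# module named on the command line that is not loaded.
missing_modules() {
    loaded=$(awk '{ print $1 }')    # first column holds the module names
    for name in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF -- "$name" || echo "$name"
    done
}
```

Example call: lsmod | missing_modules overlay br_netfilter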

# IPVS settings

iptables -P FORWARD ACCEPT

yum -y install ipvsadm ipset

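cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# Make the script executable and load the modules now

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules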

# Disable the firewall

iptables -F

iptables -X

systemctl stop firewalld.service

systemctl disable firewalld.service

# Disable SELinux

getenforce

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/g' /etc/selinux/config

# Add the yum repositories

yum install -y wget && wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

II. Cluster setup

1. Install containerd

Install

# The docker-ce repository added above ships containerd as the containerd.io package

yum install containerd.io -y

systemctl enable containerd.service

Configure

containerd config default | tee /etc/containerd/config.toml

cat > /etc/containerd/config.toml << EOF

disabled_plugins = []

imports = []

oom_score = 0

plugin_dir = ""

required_plugins = []

root = "/var/lib/containerd"

state = "/run/containerd"

temp = ""

version = 2

[cgroup]

path = ""

[debug]

address = ""

format = ""

gid = 0

level = ""

uid = 0

[grpc]

address = "/run/containerd/containerd.sock"

gid = 0

max_recv_message_size = 16777216

max_send_message_size = 16777216

tcp_address = ""

tcp_tls_ca = ""

tcp_tls_cert = ""

tcp_tls_key = ""

uid = 0

[metrics]

address = ""

grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]

deletion_threshold = 0

mutation_threshold = 100

pause_threshold = 0.02

schedule_delay = "0s"

startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]

device_ownership_from_security_context = false

disable_apparmor = false

disable_cgroup = false

disable_hugetlb_controller = true

disable_proc_mount = false

disable_tcp_service = true

enable_selinux = false

enable_tls_streaming = false

enable_unprivileged_icmp = false

enable_unprivileged_ports = false

ignore_image_defined_volumes = false

max_concurrent_downloads = 3

max_container_log_line_size = 16384

netns_mounts_under_state_dir = false

restrict_oom_score_adj = false

# Comment out the default sandbox_image line and add the one below; mind the pause version

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8"

selinux_category_range = 1024

stats_collect_period = 10

stream_idle_timeout = "4h0m0s"

stream_server_address = "127.0.0.1"

stream_server_port = "0"

systemd_cgroup = false

tolerate_missing_hugetlb_controller = true

unset_seccomp_profile = ""

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/opt/cni/bin"

conf_dir = "/etc/cni/net.d"

conf_template = ""

ip_pref = ""

max_conf_num = 1

[plugins."io.containerd.grpc.v1.cri".containerd]

default_runtime_name = "runc"

disable_snapshot_annotations = true

discard_unpacked_layers = false

ignore_rdt_not_enabled_errors = false

no_pivot = false

snapshotter = "overlayfs"

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

# Change the line below to true

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

[plugins."io.containerd.grpc.v1.cri".image_decryption]

key_model = "node"

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

#endpoint = ["https://registry-1.docker.io"]

# Comment out the line above and add the three lines below

endpoint = ["https://docker.mirrors.ustc.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]

endpoint = ["https://registry.aliyuncs.com/google_containers"]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]

tls_cert_file = ""

tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]

path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]

interval = "10s"

[plugins."io.containerd.internal.v1.tracing"]

sampling_ratio = 1.0

service_name = "containerd"

[plugins."io.containerd.metadata.v1.bolt"]

content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]

no_prometheus = false

[plugins."io.containerd.runtime.v1.linux"]

no_shim = false

runtime = "runc"

runtime_root = ""

shim = "containerd-shim"

shim_debug = false

[plugins."io.containerd.runtime.v2.task"]

platforms = ["linux/amd64"]

sched_core = false

[plugins."io.containerd.service.v1.diff-service"]

default = ["walking"]

[plugins."io.containerd.service.v1.tasks-service"]

rdt_config_file = ""

[plugins."io.containerd.snapshotter.v1.aufs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.btrfs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.devmapper"]

async_remove = false

base_image_size = ""

discard_blocks = false

fs_options = ""

fs_type = ""

pool_name = ""

root_path = ""

[plugins."io.containerd.snapshotter.v1.native"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.overlayfs"]

root_path = ""

upperdir_label = false

[plugins."io.containerd.snapshotter.v1.zfs"]

root_path = ""

[plugins."io.containerd.tracing.processor.v1.otlp"]

endpoint = ""

insecure = false

protocol = ""

[proxy_plugins]

[stream_processors]

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]

accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar"

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]

accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]

"io.containerd.timeout.bolt.open" = "0s"

"io.containerd.timeout.shim.cleanup" = "5s"

"io.containerd.timeout.shim.load" = "5s"

"io.containerd.timeout.shim.shutdown" = "3s"

"io.containerd.timeout.task.state" = "2s"

[ttrpc]

address = ""

gid = 0

uid = 0

EOF

Restart

systemctl daemon-reload

systemctl restart containerd
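Because the configuration file is long, the two edits that matter for kubeadm (the pause image and the systemd cgroup driver) are easy to miss. A minimal check might look like the sketch below; the function name check_containerd_config is an assumption, and it only greps the file rather than querying the running daemon.

```shell
#!/bin/sh
# Hypothetical helper: verify that SystemdCgroup is true and that
# sandbox_image points at a pause image in a containerd config file.
check_containerd_config() {
    cfg=${1:-/etc/containerd/config.toml}
    status=0
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg" \
        || { echo "SystemdCgroup is not true"; status=1; }
    grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"[^"]+/pause:[^"]+"' "$cfg" \
        || { echo "sandbox_image is not set to a pause image"; status=1; }
    return $status
}
```

Called without arguments it inspects /etc/containerd/config.toml and returns a non-zero status if either setting is missing.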

2. Install crictl

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.2/crictl-v1.24.2-linux-amd64.tar.gz

tar -zxf crictl-v1.24.2-linux-amd64.tar.gz

mv crictl /usr/local/bin/

Write the configuration file

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

pull-image-on-create: false

EOF

3. Install the latest kubectl, kubelet, and kubeadm

yum install kubectl kubelet kubeadm -y

systemctl start kubelet.service

systemctl enable kubelet.service

4. Generate the default kubeadm configuration file

kubeadm config print init-defaults > kubeadm.yml

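Edit the configuration file

1) Change imageRepository to the Aliyun image registry address

2) Set podSubnet and serviceSubnet to suit your own environment

3) Set cgroupDriver to systemd (optional). This field belongs in a separate KubeletConfiguration document; placing it under ClusterConfiguration only produces an "unknown field" warning like the one at the top of the init log in step 5, and kubeadm 1.22 and later default the kubelet to systemd anyway

4) Change advertiseAddress to the master's IP address, or to 0.0.0.0

5) Change nodeRegistration.name to the master's hostname, k8s-master

The modified configuration file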

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 0.0.0.0
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.25.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
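When choosing podSubnet and serviceSubnet, the two ranges should not overlap each other or the network the nodes sit on. The sketch below checks two IPv4 CIDR blocks for overlap using plain shell arithmetic. The function names are hypothetical, and the node network 192.168.1.0/24 in the usage note is an assumption based on the addresses in /etc/hosts.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit 0) when two IPv4 CIDR blocks share at least one address.
cidr_overlap() {
    a_ip=$(ip_to_int "${1%/*}")
    a_len=${1#*/}
    b_ip=$(ip_to_int "${2%/*}")
    b_len=${2#*/}
    # Compare both networks under the shorter (less specific) prefix.
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
    [ $(( $a_ip & $mask )) -eq $(( $b_ip & $mask )) ]
}
```

For the values used here, cidr_overlap 10.10.0.0/16 10.96.0.0/12 and cidr_overlap 10.10.0.0/16 192.168.1.0/24 both return non-zero, meaning the ranges are disjoint.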

5. Initialize the master node

kubeadm init --config=kubeadm.yml --upload-certs

# If the configuration is wrong, reset the cluster with kubeadm reset -f; remember to reset the worker nodes as well

# Output log

W0823 07:37:26.188671 6862 initconfiguration.go:306] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta3", Kind:"ClusterConfiguration"}: strict decoding error: unknown field "cgroupdriver"

[init] Using Kubernetes version: v1.24.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 10.1.1.10]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [10.1.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [10.1.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 19.504370 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ae70a9e894995efc394ec730e11bc660f54d717c670e3cc0b5c87a6bae5705c3

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.29:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f

Note down the join command printed above; it is needed later when the worker nodes join the cluster:

kubeadm join 192.168.1.29:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f
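Since the join command is usually copied by hand, a truncated token or hash is a common cause of join failures. The following sketch validates the two values before use. It relies on the bootstrap token format (6 characters, a dot, 16 characters, all lowercase letters or digits) and on the hash being sha256: followed by 64 hex digits; the function name valid_join_args is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: check the shape of a bootstrap token ($1) and a
# discovery CA certificate hash ($2) before passing them to kubeadm join.
valid_join_args() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

A non-zero return value means one of the two strings is malformed and should be copied again from the kubeadm init output.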

6. Set up .kube/config

# Run this as each user that should be able to use kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

7. Install the Calico network plugin

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Check the status on the master

[root@master ~]# kubectl get pods -A

NAMESPACE     NAME                                       READY   STATUS     RESTARTS      AGE
kube-system   calico-kube-controllers-5b97f5d8cf-qrg87   0/1     Pending    0             2m36s
kube-system   calico-node-4j8s9                          0/1     Init:0/3   0             2m36s
kube-system   calico-node-7vnhb                          0/1     Init:0/3   0             2m36s
kube-system   coredns-74586cf9b6-glsgz                   0/1     Pending    0             14h
kube-system   coredns-74586cf9b6-hgtf7                   0/1     Pending    0             14h
kube-system   etcd-master                                1/1     Running    1 (54m ago)   14h
kube-system   kube-apiserver-master                      1/1     Running    1 (54m ago)   14h
kube-system   kube-controller-manager-master             1/1     Running    1 (54m ago)   14h
kube-system   kube-proxy-mq7b9                           1/1     Running    1 (53m ago)   14h
kube-system   kube-proxy-wwr6d                           1/1     Running    1 (54m ago)   14h
kube-system   kube-scheduler-master                      1/1     Running    1 (54m ago)   14h

[root@master ~]# kubectl get nodes

NAME     STATUS     ROLES           AGE   VERSION
master   NotReady   control-plane   14h   v1.25.4
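Nodes stay NotReady until the network plugin pods are running, so it can be handy to list only the nodes that still need attention. A minimal sketch, assuming the default kubectl get nodes column layout shown above; the function name not_ready_nodes is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get nodes" output on stdin and
# print the name of every node whose STATUS is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Example call: kubectl get nodes | not_ready_nodes. Note that a cordoned node (STATUS Ready,SchedulingDisabled) is also reported by this sketch.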

8. Join the worker nodes

Run the join command saved earlier on each worker node; the procedure is the same for node1 and node2

# kubeadm join 10.1.1.10:6443 --token abcdef.0123456789abcdef \

# > --discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

After joining, check the status on the master node

kubectl get nodes

The token that worker nodes use to join the cluster is valid for 24 hours; a new one can be generated on the master node

kubeadm token create --print-join-command

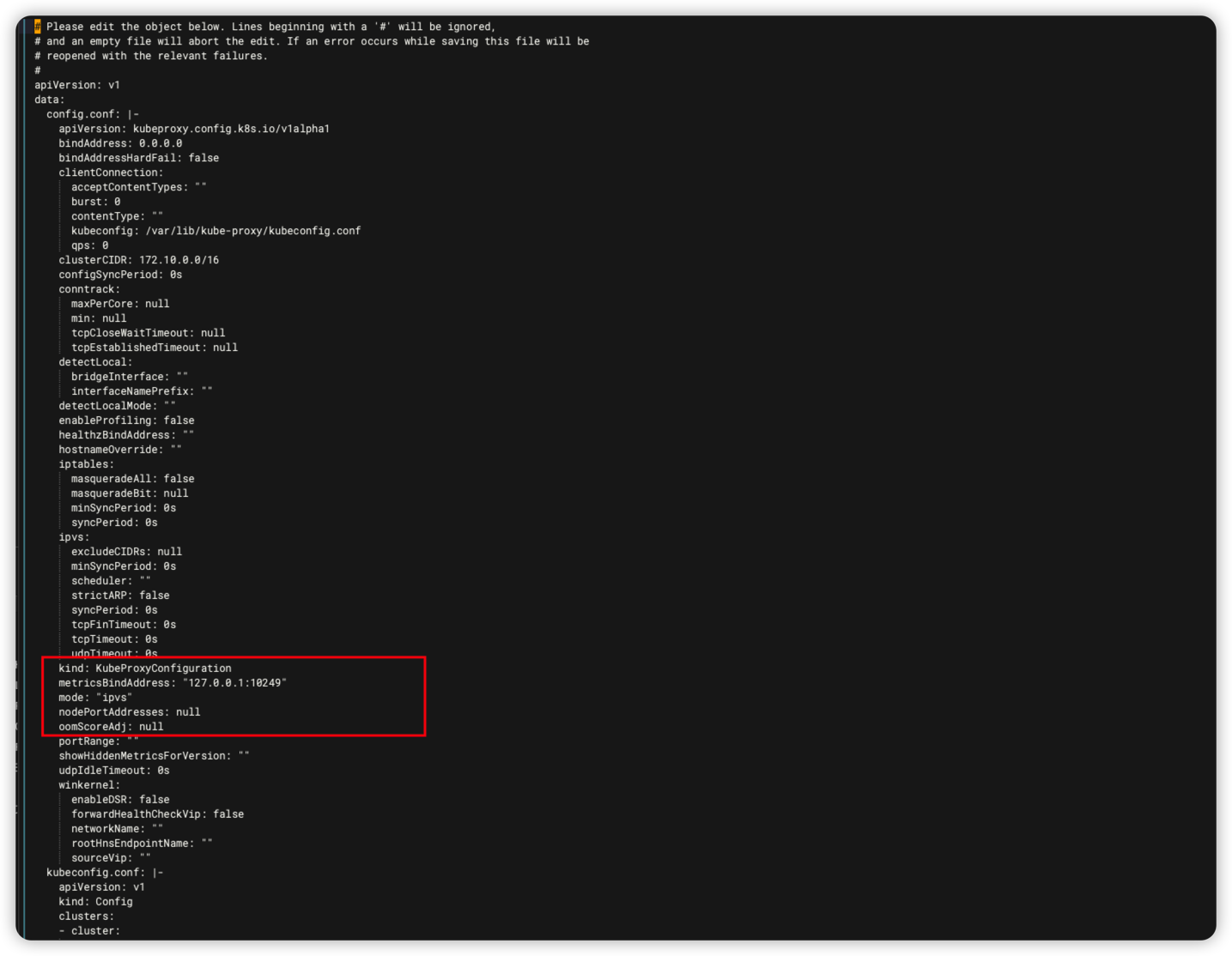

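9. Switch Kubernetes to IPVS forwarding mode

Edit the configuration on the master node

# Open the ConfigMap in an editor

kubectl -n kube-system edit cm kube-proxy

Find mode: and change it to mode: "ipvs"

Find metricsBindAddress: and change it to metricsBindAddress: "127.0.0.1:10249"

Delete the existing kube-proxy pods so that they are recreated with the new configuration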


kubectl -n kube-system get pod -l k8s-app=kube-proxy | grep -v 'NAME' | awk '{print $1}' | xargs kubectl -n kube-system delete pod
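To double-check that the edit was saved, the mode value can be read back from the ConfigMap. The sketch below extracts it from YAML text on stdin; the function name proxy_mode is hypothetical, and it assumes the mode key appears on its own line as in the default kube-proxy configuration.

```shell
#!/bin/sh
# Hypothetical helper: read the kube-proxy ConfigMap YAML on stdin and
# print the value of the first "mode:" key (empty means the default mode).
proxy_mode() {
    sed -n 's/^[[:space:]]*mode:[[:space:]]*"\{0,1\}\([a-z]*\)"\{0,1\}[[:space:]]*$/\1/p' | head -n 1
}
```

Example call: kubectl -n kube-system get cm kube-proxy -o yaml | proxy_mode. Once the pods have restarted, ipvsadm -Ln (installed earlier) should list virtual servers for the cluster services.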

10. Configure kubectl TAB completion

yum install bash-completion -y

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc
