Prepare the environment

Elasticsearch: http://10.0.0.1:9200, http://10.0.0.2:9200, http://10.0.0.3:9200

Redis: 10.0.0.1:6379

Kibana: 10.0.0.1:5601

Change the Ingress Nginx log format

Deploy Filebeat and Logstash

Configure a JSON log format for Ingress Nginx

# The quoted 'EOF' stops the shell from expanding the $nginx variables before kubectl sees them.
kubectl apply -f - <<'EOF'
apiVersion: v1
data:
  log-format-upstream: '{"@timestamp":"$time_iso8601","domain":"$server_name","hostname":"$hostname","remote_user":"$remote_user","client":"$remote_addr","proxy_protocol_addr":"$proxy_protocol_addr","@source":"$server_addr","host":"$http_host","request":"$request","request_uri":"$request_uri","url":"$uri","args":"$args","upstreamaddr":"$upstream_addr","status":"$status","upstream_status":"$upstream_status","size":"$body_bytes_sent","responsetime":"$request_time","upstreamtime":"$upstream_response_time","proxy_upstream_name":"$proxy_upstream_name","proxy_alternative_upstream_name":"$proxy_alternative_upstream_name","xff":"$http_x_forwarded_for","upstream_response_length":"$upstream_response_length","referer":"$http_referer","http_user_agent":"$http_user_agent","request_length":"$request_length","req_id":"$req_id","request_method":"$request_method","request_body":"$request_body","scheme":"$scheme","file_dir":"$request_filename","k8s_namespace":"$namespace","k8s_ingress_name":"$ingress_name","k8s_service_name":"$service_name","k8s_service_port":"$service_port","https":"$https"}'
kind: ConfigMap
metadata:
  labels:
    app: ingress-nginx
  name: nginx-configuration
  namespace: ingress-nginx
EOF
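A log format this long is easy to get subtly wrong, for example by using the same JSON key twice, in which case most parsers silently keep only the last value. The following is a minimal sketch of a pre-flight check, assuming the format string is a flat JSON object; the function name and the shortened template are made up for illustration and are not part of ingress-nginx.

```python
import json


def find_duplicate_keys(log_format):
    """Return the keys that appear more than once in a flat JSON template."""
    seen = set()
    duplicates = []

    def collect(pairs):
        # json.loads passes every key/value pair here, including repeats.
        for key, _ in pairs:
            if key in seen and key not in duplicates:
                duplicates.append(key)
            seen.add(key)
        return dict(pairs)

    json.loads(log_format, object_pairs_hook=collect)
    return duplicates


# Shortened, hypothetical template in the same style as log-format-upstream.
template = (
    '{"@timestamp":"$time_iso8601","request":"$request",'
    '"request":"$request_uri","status":"$status"}'
)
print(find_duplicate_keys(template))
```

Running the same function over the real value of log-format-upstream before applying the ConfigMap should return an empty list.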

Deploy Filebeat

# Filebeat deployment manifest (filebeat-kubernetes.yaml)
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.autodiscover:
      providers:
        - type: kubernetes
          node: ${NODE_NAME}
          hints.enabled: true
          hints.default_config:
            type: container
            paths:
              - /var/log/containers/*${data.kubernetes.container.id}.log

    processors:
      - add_cloud_metadata:
      - add_host_metadata:
      - drop_fields:
          # Drop fields that are not needed
          fields: ["log", "input", "host", "agent", "ecs", "cloud", "container"]

    setup.kibana.host: "http://10.0.0.1:5601/kibana"
    setup.dashboards.enabled: true

    output.redis:
      hosts: ["10.0.0.1"]
      key: "filebeat"
      db: 11
      timeout: 5

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:8.1.0
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTIC_CLOUD_ID
          value:
        - name: ELASTIC_CLOUD_AUTH
          value:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
          # Privileged mode is needed on Red Hat OpenShift; it is enabled here.
          privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0640
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: varlog
        hostPath:
          path: /var/log
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          # When filebeat runs as non-root user, this directory needs to be writable by group (g+w).
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat
  namespace: kube-system
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: Role
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-system
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: Role
  name: filebeat-kubeadm-config
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  - nodes
  verbs:
  - get
  - watch
  - list
- apiGroups: ["apps"]
  resources:
  - replicasets
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat
  # should be the namespace where filebeat is running
  namespace: kube-system
  labels:
    k8s-app: filebeat
rules:
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs: ["get", "create", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - configmaps
  resourceNames:
  - kubeadm-config
  verbs: ["get"]

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---

# Deploy Filebeat
kubectl apply -f filebeat-kubernetes.yaml
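Once the DaemonSet is running, one way to confirm that events reach Redis is to look at the length of the `filebeat` list in database 11. The sketch below speaks the Redis wire protocol (RESP) directly over a socket so that it needs no third-party client. It assumes Redis has no password and that each reply arrives in a single `recv`, which is fine for a quick check but not for production code; all function names are illustrative.

```python
import socket


def encode_resp(*parts):
    """Encode one Redis command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(parts)]
    for part in parts:
        data = part.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def parse_integer_reply(reply):
    """Parse a RESP integer reply such as b':42\\r\\n'."""
    if not reply.startswith(b":"):
        raise ValueError(f"unexpected reply: {reply!r}")
    return int(reply[1:].strip())


def queue_length(host="10.0.0.1", port=6379, db=11, key="filebeat"):
    """Return LLEN of the list that Filebeat writes to (no auth assumed)."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(encode_resp("SELECT", str(db)))
        conn.recv(64)  # expected to be b"+OK\r\n"
        conn.sendall(encode_resp("LLEN", key))
        return parse_integer_reply(conn.recv(64))


# Usage (needs network access to the Redis host):
#   print(queue_length())
```

A length that stays at 0 is not necessarily a problem: once Logstash is consuming the list, it may be drained as fast as Filebeat fills it.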

Deploy Logstash

cat logstash.conf
# Logstash pipeline configuration
input {
  # Read log events from the Redis list that Filebeat writes to
  redis {
    id => "redis_id"
    host => "10.0.0.1"
    port => 6379
    key => "filebeat"
    data_type => "list"
    db => 11
  }
}
filter {
  # Remove fields that are not needed
  mutate {
    remove_field => [
      "orchestrator",
      "event",
      "[kubernetes][replicaset]",
      "[kubernetes][namespace_labels]",
      "[kubernetes][node][labels]",
      "[kubernetes][labels]",
      "[kubernetes][namespace_uid]",
      "[kubernetes][node][hostname]",
      "[kubernetes][node][uid]",
      "[kubernetes][pod][uid]"
    ]
  }
  # Use the Kubernetes deployment name to decide whether the event comes from ingress-nginx
  if [kubernetes][deployment][name] == "ingress-nginx-controller-web-nginx" {
    # Parse the JSON log line carried in the message field
    json {
      source => "message"
      #target => "doc"
      remove_field => ["message"]
    }
  }
  # Only continue if the client field (nginx $remote_addr) is present
  if [client] {
    # Resolve the client IP address with geoip
    geoip {
      source => "client"
      target => "geoip"
    }
    mutate {
      # Convert field types
      convert => {
        "size" => "integer"
        "status" => "integer"
        "upstream_status" => "integer"
        "responsetime" => "float"
        "upstreamtime" => "float"
      }
    }
    useragent {
      # Derive browser and client device details from http_user_agent
      id => "useragent_1"
      source => "http_user_agent"
      target => "ua"
    }
  }
}
output {
  elasticsearch {
    id => "elasticsearch_1"
    hosts => ["10.0.0.1:9200","10.0.0.2:9200","10.0.0.3:9200"]
    index => "logstash-%{+yyyy.MM.dd}"
  }
}

# Create a ConfigMap named logstash from the contents of logstash.conf
kubectl create configmap logstash --from-file=./logstash.conf -n efk
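To make the effect of the filter section easier to picture, here is a rough Python imitation of two of its steps: parsing the JSON carried in `message`, then converting the numeric fields. It is only an illustration of the data flow, not how Logstash is implemented. The sample event is invented, and leaving non-numeric values such as `-` untouched is a choice made in this sketch rather than a statement about what the mutate filter does with them.

```python
import json

INT_FIELDS = ("size", "status", "upstream_status")
FLOAT_FIELDS = ("responsetime", "upstreamtime")


def parse_ingress_event(event):
    """Imitate the json and mutate/convert steps on one event dict."""
    out = dict(event)
    # json filter: merge the parsed message into the event and drop "message".
    out.update(json.loads(out.pop("message")))
    casts = [(name, int) for name in INT_FIELDS]
    casts += [(name, float) for name in FLOAT_FIELDS]
    for name, cast in casts:
        try:
            out[name] = cast(out[name])
        except (KeyError, ValueError):
            # Sketch-only behaviour: keep missing or non-numeric values as they are.
            pass
    return out


# Invented sample; real events carry many more fields.
sample = {
    "message": '{"client":"203.0.113.7","status":"200","size":"512",'
               '"responsetime":"0.012","upstreamtime":"-","upstream_status":"200"}'
}
event = parse_ingress_event(sample)
print(event["status"], event["responsetime"], event["upstreamtime"])
```

Storing `status` and `responsetime` as numbers is what allows range queries and aggregations such as average response time in Kibana.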

Create the logstash.yaml file

Remember to change the IP addresses in the initContainers

---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: logstash
  namespace: efk
  labels:
    k8s.kuboard.cn/layer: cloud
    k8s.kuboard.cn/name: logstash
  annotations:
    k8s.kuboard.cn/displayName: logstash
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: cloud
      k8s.kuboard.cn/name: logstash
  template:
    metadata:
      creationTimestamp: null
      # Pod labels must match spec.selector.matchLabels, otherwise the Deployment is rejected
      labels:
        k8s.kuboard.cn/layer: cloud
        k8s.kuboard.cn/name: logstash
    spec:
      volumes:
        - name: volume-tm7fz
          configMap:
            name: logstash
            items:
              - key: logstash.conf
                path: logstash.conf
            defaultMode: 420
      initContainers:
        - name: redis-busybox
          image: busybox
          command:
            - sh
          args:
            - '-c'
            - >-
              until nc -zvw3 10.0.0.1 6379; do echo
              waiting for redis; sleep 2; done;
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
        - name: es-busybox
          image: busybox
          command:
            - sh
          args:
            - '-c'
            - >-
              until nc -zvw3 10.0.0.1 9200; do echo
              waiting for es; sleep 2; done;
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
      containers:
        - name: logstash
          image: 'logstash:8.1.0'
          resources: {}
          volumeMounts:
            - name: volume-tm7fz
              readOnly: true
              mountPath: /usr/share/logstash/pipeline/logstash.conf
              subPath: logstash.conf
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600

# Deploy Logstash
kubectl apply -f logstash.yaml
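The two init containers simply block until Redis and Elasticsearch accept TCP connections. If you want to run the same reachability check from a workstation before applying the manifest, a Python equivalent of the `until nc -z ...` loop could look like the sketch below. The function name and its defaults are illustrative; the 3 second connect timeout and 2 second pause mirror the values in the manifest.

```python
import socket
import time


def wait_for_port(host, port, timeout=3.0, retries=30, delay=2.0):
    """Return True as soon as host:port accepts a TCP connection.

    Tries up to `retries` times, sleeping `delay` seconds after each
    failed attempt, and returns False if the port never opens.
    """
    for _ in range(retries):
        try:
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            time.sleep(delay)
    return False


# Usage (addresses taken from the environment described in this post):
#   wait_for_port("10.0.0.1", 6379)   # Redis
#   wait_for_port("10.0.0.1", 9200)   # Elasticsearch
```

Like `nc -z`, this only proves that the port is open; it does not check that the service behind it is healthy.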

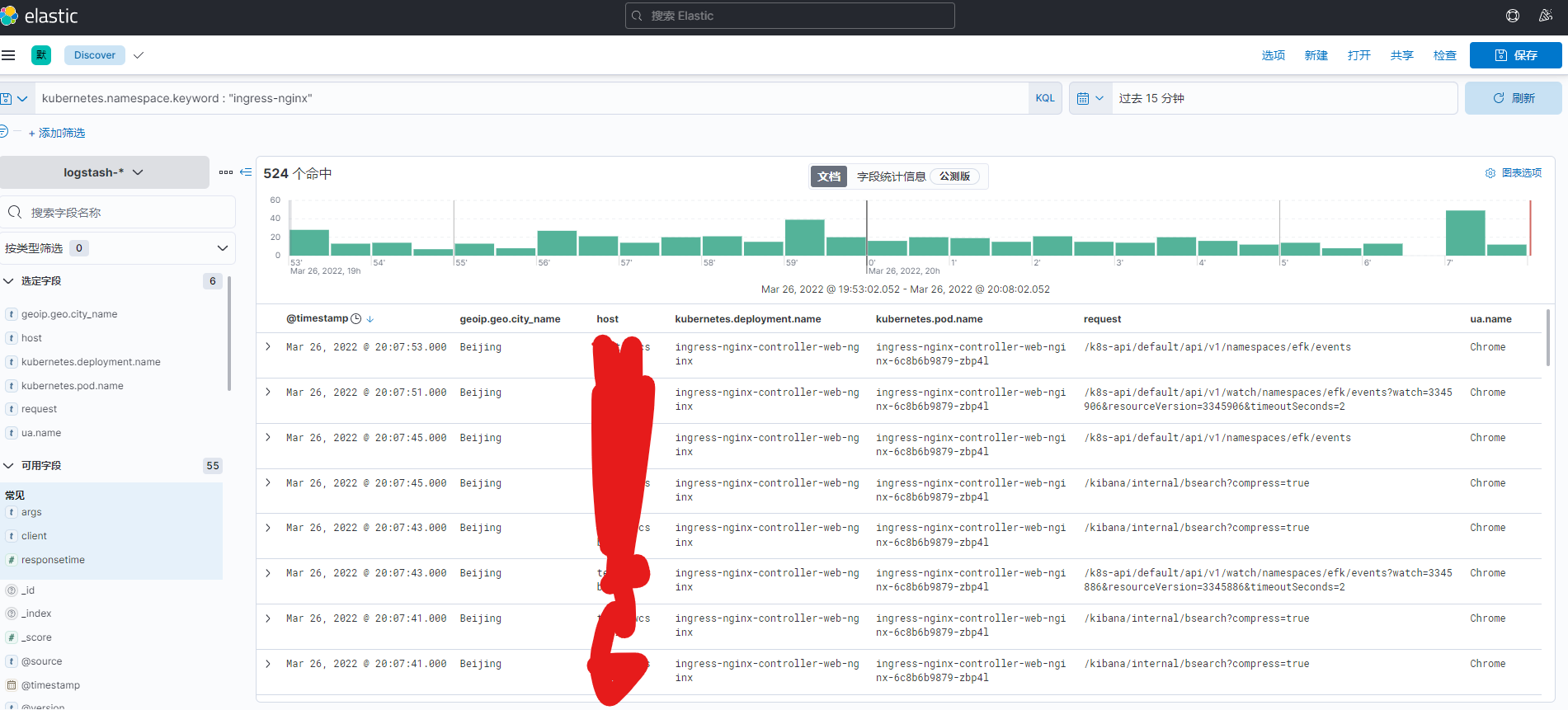

Verify with Kibana
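When checking in Kibana, it helps to know which index the events should land in. The output section writes to `logstash-%{+yyyy.MM.dd}`, and Logstash formats that date from the event's `@timestamp` in UTC, so an event logged shortly after midnight local time can end up in the previous day's index. The helper below is a small sketch of that naming rule; the function names are made up, and the `_count` URL assumes the cluster is reachable without authentication.

```python
from datetime import datetime, timedelta, timezone


def daily_index(ts, prefix="logstash-"):
    """Index name for an event timestamp, following logstash-%{+yyyy.MM.dd} in UTC."""
    return prefix + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


def count_url(ts, host="http://10.0.0.1:9200"):
    """URL of the Elasticsearch _count API for that day's index."""
    return f"{host}/{daily_index(ts)}/_count"


# An event logged at 02:00 on 16 March in UTC+8 belongs to the 15 March index.
local_ts = datetime(2022, 3, 16, 2, 0, tzinfo=timezone(timedelta(hours=8)))
print(daily_index(local_ts))
print(count_url(local_ts))
```

In Kibana, a data view (index pattern) of `logstash-*` covers all of these daily indices.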
